Authors: Trapit Bansal, Rishikesh Jha, Tsendsuren Munkhdalai, Andrew McCallum

Paper reference: https://aclanthology.org/2020.emnlp-main.38.pdf

Contribution

This paper proposes a self-supervised approach SMLMT (Subset Masked Language Modeling Tasks) which generates a large and diverse meta-learning task distribution from unlabeled sentences. It enables training of meta-learning methods for NLP at a large scale while also ameliorating the risk of over-fitting to the training task distribution. This meta-training leads to better few-shot generalization to novel/unseen tasks than pre-trained models (such as BERT) followed by fine-tuning.

Details

Pre-training v.s. Meta-learning

Large scale pre-training suffers from a train-test mismatch as the model is not optimized to learn an initial point that yields good performance when fine-tuned with few examples. Fine-tuning of a pre-trained model typically introduces new random parameters, which are hard to estimate robustly from the few examples.

Self-supervised Tasks for Meta-learning (SMLMT)

Limitations of meta-learning in NLP:

- Large classification datasets with large label spaces are not readily available for all NLP tasks.

- Meta-learning approaches in NLP trains to generalize to new labels of a specific task, but doesn’t generalize to novel tasks.*

SMLMT

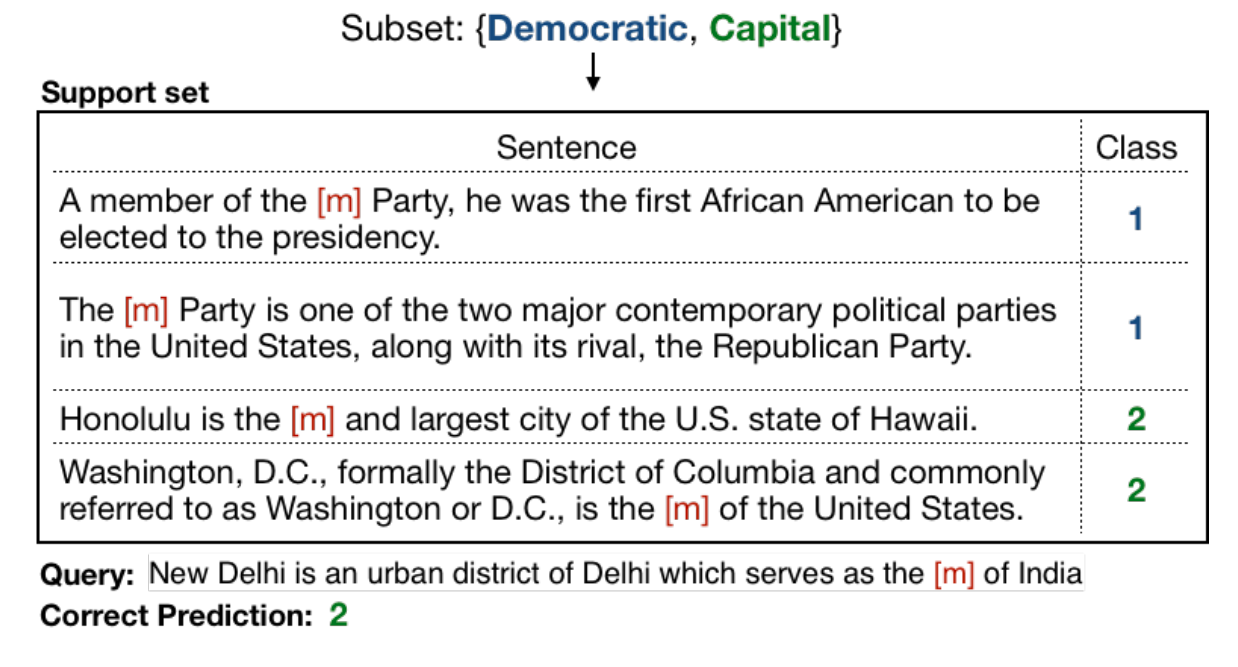

Each SMLMT task is defined from a subset of vocabulary words. It consists of following steps to create one $N$-way classification task:

(1) Randomly select $N$ unique vocabulary words;

(2) Consider all sentences containing these $N$ words, and for each word randomly sample $k$ and $q$ sentences for the support set and query set.

(3) Mask the corresponding chosen word from the sentences with the mask token $[m]$ in each of these $N$ sets;

(4) Assign labels ${1, …, N}$ to these classes and ignore original masked words.

The task is to predict a label for each sentence (unlike MLM which is a word-level classification task). The way of creating tasks like this enables large-scale meta-learning from unsupervised data.

Hybrid SMLMT combined with supervised tasks to encourage better feature learning and increase diversity in tasks for meta-learning. In each episode select an SMLMT task with probability $\lambda$ or a supervised task with probability $(1 − \lambda)$. The use of SMLMT jointly with supervised tasks ameliorates meta-overfitting.

Model

The paper uses the MAML algorithm to meta-learn an initial point that can generalize to novel NLP tasks, with a bit modification on the model so that it can handle diverse number of classes. Text encoder is BERT.

Takeaways

Few-shot generalization to unseen tasks

The paper evaluates performance on novel tasks not seen during training. Without using supervised data, meta-trained SMLMT performs better than fine-tuning BERT, specially for small number of examples (<16). With supervised data, Hybrid-SMLMT outperform all baselines by a large margin.

Ameliorate meta-overfitting

Learned learning rates converge towards large non-zero values for most layers under Hybrid-SMLMT setup, which indicates that SMLMT helps in ameliorating meta-overfitting.

Learned Representation

Hybrid-SMLMT model is closest to the initial point after task-specific fine-tuning, indicating a better initialization point. Representations in lower layers are more similar before and after fine-tuning, and less in the top few layers across models.