Authors: Xinyu Hua, Lu Wang

Paper reference: https://aclanthology.org/2020.emnlp-main.57.pdf

Contribution

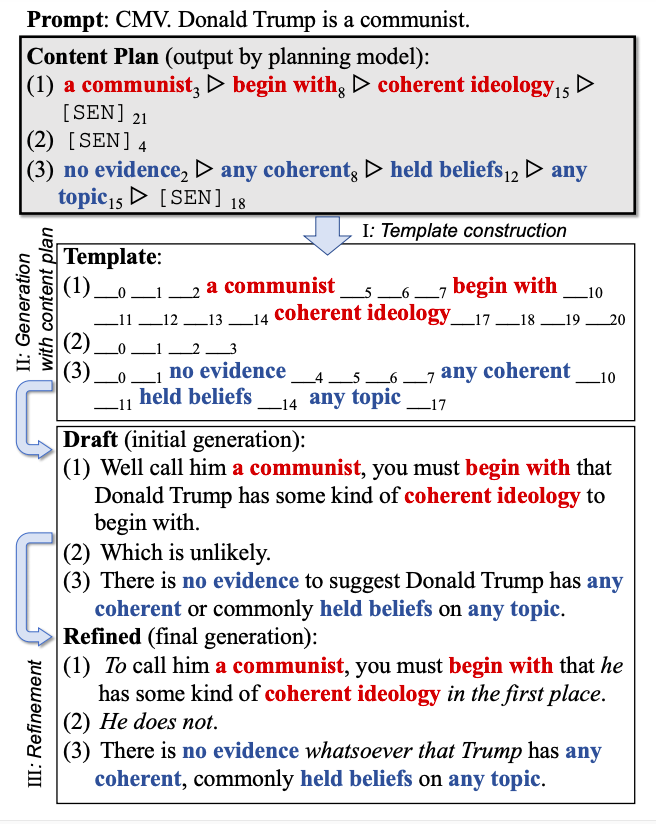

This work presents PAIR, a novel content-controlled text generation framework based on planning and iterative refinement. It aims to generate more relevant and coherent text by incorporating content plans into the generation model.

The paper first designs a content planning model built on BERT that automatically constructs the initial content plan (template) by assigning keyphrases to different sentences and predicting their positions. It then proposes a refinement algorithm that gradually improves generation quality with BART by repeatedly masking parts of the output and filling in the updated templates.

The refinement algorithm steadily boosts performance and improves both content and fluency.

Details

Task formulation: Given a sentence-level prompt and a set of keyphrases relevant to the prompt, the task is to generate long-form text consisting of multiple sentences that reflect the keyphrases in a coherent way.

Content Planning with BERT

The content planning model is built on BERT and learns to assign keyphrases to different sentences and to predict their positions within those sentences.

Adding Content Plan with a Template Mask-and-Fill Procedure

The content plan is converted into a template: for each sentence, the assigned keyphrases are placed at their predicted positions, and the remaining slots are filled with [MASK] tokens. The decoder then generates an output by filling in these masks.
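The template construction for a single sentence can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `build_template`, the word-level tokenization, and the assumption that the sentence length is known in advance are all hypothetical choices made for the example.

```python
from typing import List, Tuple

MASK = "[MASK]"


def build_template(assignments: List[Tuple[str, int]], length: int) -> List[str]:
    """Build a one-sentence template from (keyphrase, start_position) pairs.

    Assumption: positions are word-level offsets and `length` is the
    sentence length; both are illustrative, not taken from the paper.
    """
    template = [MASK] * length
    for phrase, start in assignments:
        words = phrase.split()
        if start < 0 or start + len(words) > length:
            raise ValueError(f"keyphrase {phrase!r} does not fit at position {start}")
        # Overwrite the masked slots with the keyphrase words.
        template[start:start + len(words)] = words
    return template


example = build_template([("content plan", 1), ("templates", 5)], length=7)
print(" ".join(example))
# [MASK] content plan [MASK] [MASK] templates [MASK]
```

A generation model would then be asked to replace every remaining `[MASK]` slot while keeping the keyphrases in place.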

Iterative Refinement

At each iteration, the least confident tokens in the current output are replaced with [MASK], and the result serves as the next iteration’s template.
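The re-masking step of one refinement iteration might look like the sketch below. It assumes that each generated token comes with a confidence score (for example, the probability the model assigned to it) and that a fixed number of tokens is re-masked per iteration; the function name `remask_least_confident` and the parameter `num_to_mask` are hypothetical, and the paper's actual masking schedule may differ.

```python
from typing import List

MASK = "[MASK]"


def remask_least_confident(
    tokens: List[str], confidences: List[float], num_to_mask: int
) -> List[str]:
    """Replace the `num_to_mask` lowest-confidence tokens with [MASK].

    Assumption: `confidences[i]` is the model's confidence in `tokens[i]`.
    Ties are broken in favour of masking the earlier position.
    """
    if len(tokens) != len(confidences):
        raise ValueError("tokens and confidences must have the same length")
    if num_to_mask < 0:
        raise ValueError("num_to_mask must be non-negative")
    # Indices sorted from least to most confident (sorted() is stable).
    order = sorted(range(len(tokens)), key=lambda i: confidences[i])
    to_mask = set(order[:num_to_mask])
    return [MASK if i in to_mask else tok for i, tok in enumerate(tokens)]


draft = ["the", "plan", "guides", "a", "generation"]
scores = [0.9, 0.95, 0.4, 0.3, 0.8]
print(remask_least_confident(draft, scores, num_to_mask=2))
# ['the', 'plan', '[MASK]', '[MASK]', 'generation']
```

The returned list plays the role of the next iteration's template: the model fills the new `[MASK]` slots, and the procedure repeats.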