Authors: Ohad Rubin, Jonathan Herzig, Jonathan Berant

Paper reference: https://arxiv.org/pdf/2112.08633.pdf

Contribution

This paper proposes an efficient method for retrieving prompts. It uses LMs to label examples that can serve as good prompts, and train a prompt retriever from this signal.

Details

Prompt Retrieval

Given a training set $\mathcal{D}=\{(x_{i}, y_{i})\}_{i=1}^{n}$ of input-output sequences, and a test example $x_{test}$, the goal is to train a retriever model, $R\left(x_{\text{test}}, \mathcal{D}\right)$, that will retrieve a subset of training examples $\mathcal{P}=\{\left(x_{j}, y_{j}\right)\}_{j=1}^{m} \subset \mathcal{D}$, where $m \ll n$ as prompt.

Train the Efficient Prompt Retriever (EPR)

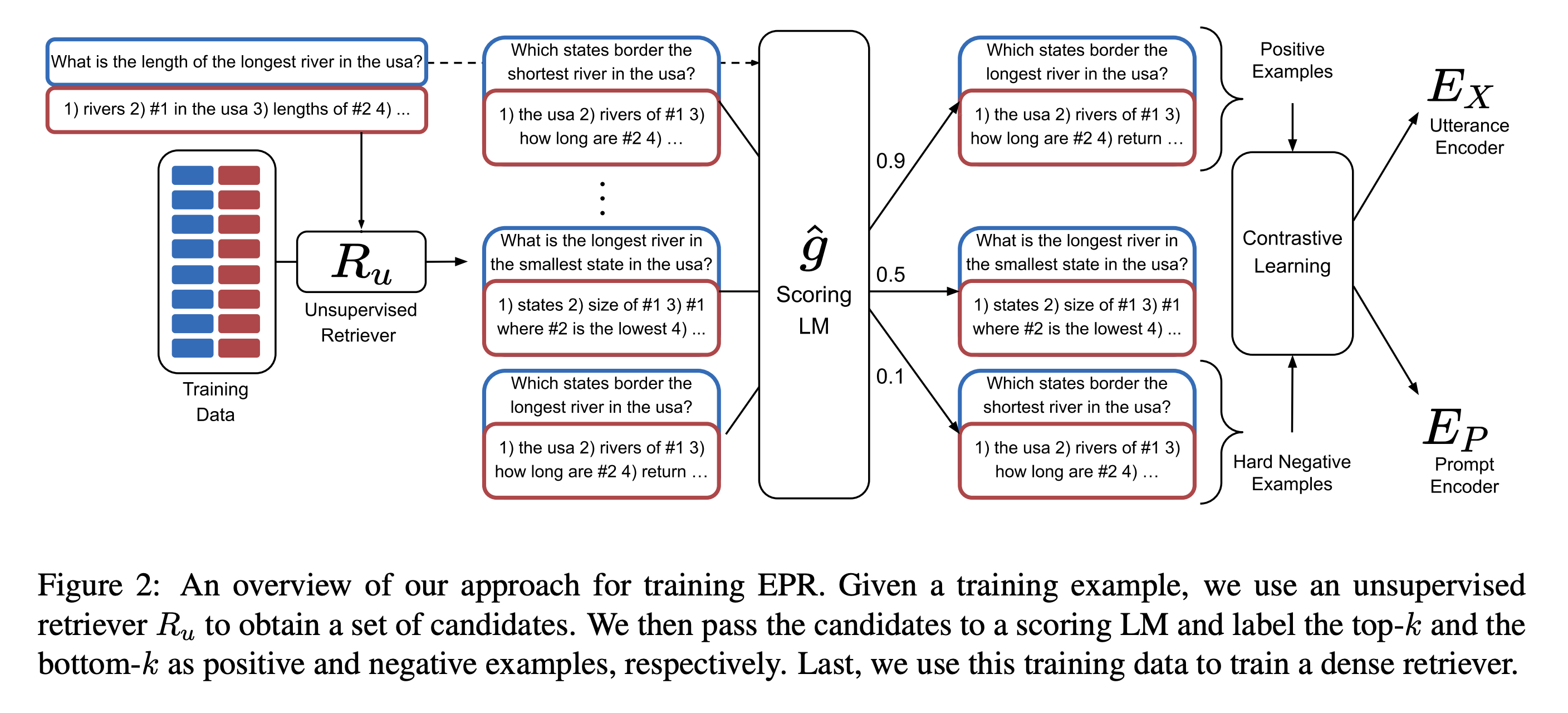

The main job of this paper is to train an Efficient Prompt Retriever (EPR) to find training examples that can serve as good prompts for other training examples.

Overview

- For each training example $(x, y)$, retrieve a set of candidate training examples $\overline{\mathcal{E}}={\bar{e}_{1}, \cdots, \overline{e_{L}}}$.

- Score each candidate $\bar{e}_{l} \in \overline{\mathcal{E}}$ independently with a scoring LM $\hat{g}$ with $$s\left(\bar{e}_{l}\right)=\operatorname{Prob}_{\hat{g}}\left(y \mid \bar{e}_{l}, x\right).$$

- Label training examples that lead to high probability as positive examples and low probability as negative examples.

- Train a prompt retriever from this data using contrastive learning.

Candidate Retrieval

Since scoring all pairs of training examples is computationally prohibitive, a candidate retriever is necessary to scale down high-quality candidates first (select 50 in the paper).

Experiments show that the unsupervised retriever BM25, a sparse retriever that relies on surface text similarity (an extension of TF-IDF), is an efficient model to select candidates.

Scoring LM

When we cannot access inference time LM $g$’s weights and only use it as a service (for huge language models like GPT-3, etc.), we can use a smaller LM $\hat{g}$ to train a much lighter-weight retriever that is only tasked with learning a similarity function.

Positive/Negative Examples

Positive examples $\mathcal{E}_{pos}$ includes the top-$k$ candidates in $\overline{\mathcal{E}}$ according to $s(\bar{e}_{l})$; negative examples $\mathcal{E}_{neg}$ includes the bottom-$k$ candidates in $\overline{\mathcal{E}}$ according to $s(\bar{e}_{l})$. [$k=5$]

These will be hard negatives since all examples are from high-quality (most-similar) candidates.

Train EPR

The general idea is to train two BERT-base encoders. $E_X(\cdot)$ encodes $x$, and $E_{P}(\cdot)$ encodes positive/negative examples. The goal is to learn encodings through contrastive learning to tell the difference between positive and negative examples. The similarity score is defined as the inner product of $E_X(\cdot)$ and $E_{P}(\cdot)$.

EPR Inference

First, encode entire set of training examples with $E_{P}(\cdot)$, since they are all treated as potential prompt for some $x_{test}$. Then, encode $x_{test}$ with $E_X(\cdot)$ and find most similar examples by maximum inner-product search over the training data. Finally, concatenate them together and feed into LM $g$.