Authors: Sam Wiseman, Stuart M. Shieber, Alexander M. Rush

Paper reference: https://arxiv.org/pdf/1808.10122.pdf

Contribution

This paper proposes a data-driven neural generation system whose decoder is a neural hidden semi-Markov model (HSMM), designed to generate template-like text. More specifically, the HSMM learns latent, discrete templates jointly with learning to generate. These templates make generation more interpretable and controllable, while performance stays close to that of seq2seq (encoder-decoder) generation models.

The proposed model can explicitly force generation to follow a chosen template, where the templates are learned automatically as latent variables from the training data, while the database $x$ is held fixed.

Details

Task Definition

This paper tackles the problem of generating an adequate and fluent text description $y$ of a database $x$, which consists of a collection of records.
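To make the input and output concrete, here is a minimal sketch of one possible representation. It assumes each record is a (type, entity, value) triple; the `Record` class, the field names, the example values, and the `mentioned_values` helper are all hypothetical and only illustrate what "describing the records in $x$" means.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One database record (hypothetical (type, entity, value) layout)."""
    type: str
    entity: str
    value: str


# A database x is a collection of records (values invented for illustration).
x = [
    Record("name", "restaurant_1", "The Golden Palace"),
    Record("food", "restaurant_1", "Chinese"),
    Record("priceRange", "restaurant_1", "cheap"),
]

# One possible tokenized description y of x.
y = "The Golden Palace is a cheap Chinese restaurant .".split()


def mentioned_values(db, tokens):
    """Values whose tokens all occur in the text: a crude adequacy check."""
    present = set(tokens)
    return [r.value for r in db if all(t in present for t in r.value.split())]


print(mentioned_values(x, y))  # ['The Golden Palace', 'Chinese', 'cheap']
```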

HSMM

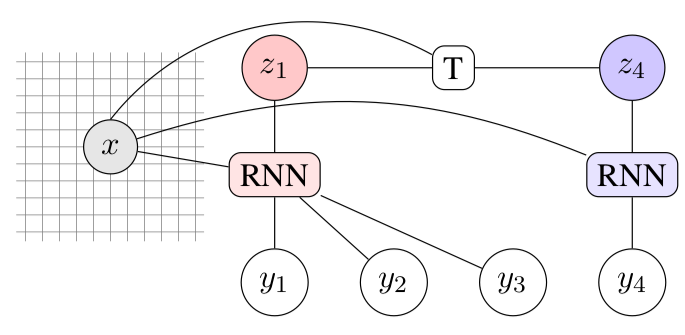

A hidden semi-Markov model (HSMM) models latent segmentations of an output sequence. Unlike an HMM, where each hidden state emits a single token, an HSMM emission may span multiple time steps. The emission model describes the generation of a text segment conditioned on a latent state and on the source information. It allows interdependence between tokens (but not between segments) by making each next-token distribution depend on all previously generated tokens.
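The segment-level structure can be illustrated with the standard HSMM forward recursion, which sums the probability of $y$ over every segmentation and state sequence. This is a toy sketch under stated assumptions: two states, segments of length at most 2, invented probability tables, and an emission model in which tokens are independent given the state (the paper's neural emission model instead conditions each token on the previously generated tokens and on the source). `forward`, `brute_force`, and all tables are hypothetical names for illustration.

```python
import itertools
import math

# Toy HSMM: two latent states, segments of length 1 or 2, tokens "a"/"b".
# All tables are invented for illustration, not taken from the paper.
STATES = [0, 1]
MAX_LEN = 2
init = {0: 0.6, 1: 0.4}                        # p(first state)
trans = {0: {0: 0.3, 1: 0.7},                  # p(next state | state)
         1: {0: 0.5, 1: 0.5}}
length = {0: {1: 0.5, 2: 0.5},                 # p(segment length | state)
          1: {1: 0.8, 2: 0.2}}
emit = {0: {"a": 0.9, "b": 0.1},               # p(token | state)
        1: {"a": 0.2, "b": 0.8}}


def segment_prob(z, seg):
    """p(seg | z). Tokens are independent given z in this sketch; the paper's
    emission model instead conditions each token on earlier tokens."""
    return math.prod(emit[z][w] for w in seg)


def forward(y):
    """Probability of y summed over every segmentation and state sequence.

    alpha[t][z] is the probability of y[:t] with a segment in state z
    ending exactly at position t.
    """
    T = len(y)
    alpha = [dict.fromkeys(STATES, 0.0) for _ in range(T + 1)]
    for t in range(1, T + 1):
        for z in STATES:
            for l in range(1, min(MAX_LEN, t) + 1):
                local = length[z][l] * segment_prob(z, y[t - l:t])
                if t == l:  # first segment of the sequence
                    prev = init[z]
                else:
                    prev = sum(alpha[t - l][zp] * trans[zp][z] for zp in STATES)
                alpha[t][z] += prev * local
    return sum(alpha[T].values())


def compositions(n):
    """All ways to split n tokens into segment lengths in 1..MAX_LEN."""
    if n == 0:
        yield []
        return
    for l in range(1, min(MAX_LEN, n) + 1):
        for rest in compositions(n - l):
            yield [l] + rest


def brute_force(y):
    """Same quantity as forward(), by explicit enumeration (exponential)."""
    total = 0.0
    for lens in compositions(len(y)):
        for zs in itertools.product(STATES, repeat=len(lens)):
            p, pos = 1.0, 0
            for i, (z, l) in enumerate(zip(zs, lens)):
                p *= init[z] if i == 0 else trans[zs[i - 1]][z]
                p *= length[z][l] * segment_prob(z, y[pos:pos + l])
                pos += l
            total += p
    return total


y = ["a", "a", "b"]
print(forward(y), brute_force(y))  # the two numbers should agree
```

The dynamic program costs roughly $O(T \cdot L \cdot K^2)$ for $T$ tokens, maximum segment length $L$, and $K$ states, whereas the enumeration grows exponentially; the brute-force version is there only to show what the recursion sums over.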

Extract Templates

Templates are useful here because they make explicit which part of the generated text is associated with which record in the knowledge base. Every segment in the generated $y$ is typed by its corresponding latent variable.

Given a database $x$ and a reference text $y$, the hidden variables $z$ can be obtained through maximum a posteriori (MAP) assignments, computed with a dynamic program analogous to Viterbi decoding in an HMM. These assignments yield a typed segmentation of $y$, so that text segments can be associated with the discrete labels $z$ that frequently generate them. The most common sequences of hidden states $z$ in the training-data segmentations are then collected and used as templates. These templates can be used to influence word ordering, as well as which fields are mentioned in the generated text.
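The extraction step can be sketched as a segment-level Viterbi search followed by counting state sequences. This is a minimal illustration, not the paper's implementation: it reuses an invented two-state toy HSMM with token-independent emissions, and `viterbi_segment`, `template_of`, and `extract_templates` are hypothetical names. A template here is simply the tuple of segment states in the MAP segmentation.

```python
import math
from collections import Counter

# Toy HSMM parameters (invented); the paper's emission model is neural.
STATES = [0, 1]
MAX_LEN = 2
init = {0: 0.6, 1: 0.4}
trans = {0: {0: 0.3, 1: 0.7}, 1: {0: 0.5, 1: 0.5}}
length = {0: {1: 0.5, 2: 0.5}, 1: {1: 0.8, 2: 0.2}}
emit = {0: {"a": 0.9, "b": 0.1}, 1: {"a": 0.2, "b": 0.8}}


def log_local(z, seg):
    """log p(len | z) + log p(seg | z) for a single segment."""
    return math.log(length[z][len(seg)]) + sum(math.log(emit[z][w]) for w in seg)


def viterbi_segment(y):
    """MAP typed segmentation of y as a list of (state, segment) pairs."""
    T = len(y)
    best = [dict.fromkeys(STATES, -math.inf) for _ in range(T + 1)]
    back = [dict.fromkeys(STATES) for _ in range(T + 1)]
    for t in range(1, T + 1):
        for z in STATES:
            for l in range(1, min(MAX_LEN, t) + 1):
                local = log_local(z, y[t - l:t])
                if t == l:  # first segment: no predecessor state
                    cand, prev = math.log(init[z]) + local, None
                else:
                    prev = max(STATES,
                               key=lambda s: best[t - l][s] + math.log(trans[s][z]))
                    cand = best[t - l][prev] + math.log(trans[prev][z]) + local
                if cand > best[t][z]:
                    best[t][z] = cand
                    back[t][z] = (l, prev)
    # Trace back from the highest-scoring final state.
    z, t, segments = max(STATES, key=lambda s: best[T][s]), T, []
    while t > 0:
        l, prev = back[t][z]
        segments.append((z, y[t - l:t]))
        t, z = t - l, prev
    return segments[::-1]


def template_of(segments):
    """Drop the segment text and keep only the sequence of states."""
    return tuple(z for z, _ in segments)


def extract_templates(corpus, k):
    """The k most frequent state sequences over a corpus of token lists."""
    counts = Counter(template_of(viterbi_segment(y)) for y in corpus)
    return counts.most_common(k)


print(viterbi_segment(["a", "b"]))  # [(0, ['a']), (1, ['b'])]
print(extract_templates([["a", "b"], ["a", "b"], ["b"]], 1))  # [((0, 1), 2)]
```

Working in log space avoids numerical underflow on long sequences; at generation time, a chosen template would fix the state sequence and leave only the segment text to be produced.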