Authors: Meng Cao, Yue Dong, Jackie Chi Kit Cheung

Paper reference: https://arxiv.org/pdf/2109.09784.pdf

Contribution

This paper proposes a method for detecting entity hallucinations in abstractive summarization and separating factual from non-factual ones, using each entity's prior and posterior probabilities under masked language models (MLMs).

Experiments show that more than half of the hallucinated entities are factual with respect to the source document and world knowledge, and that the knowledge behind many factual hallucinations comes from the training set.

Note: The paper focuses on extrinsic hallucinations only.

Details

Problem Formulation

For an entity $e_k$ in a generated summary $G$, the task is to determine whether $e_k$ is hallucinated and, if it is, whether it is factual.

The Prior & Posterior Probability of an Entity

Prior probability: the probability of an entity $e_k$ appearing in a summary $G$ without considering the source document $S$. It is measured by an MLM (BART-Large), where $c_k = G \setminus e_k$ is the summary with $e_k$ masked out:

$$ \begin{aligned} p_{\text{prior}}(e_k) &= P_{\text{MLM}}(e_k \mid c_k) \\ &= \prod_{t=1}^{|e_k|} P_{\text{MLM}}\left(e_k^t \mid e_k^{1 \ldots t-1}, c_k\right) \end{aligned} $$

Posterior probability: the probability of an entity $e_k$ appearing in a summary when the source document $S$ is taken into account. It is measured by a conditional MLM (CMLM), also based on BART-Large:

$$ p_{\text {pos }}\left(e_{k}\right)=P_{\mathrm{CMLM}}\left(e_{k} \mid c_{k}, S\right) $$
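Both quantities are the same chain-rule product over the entity's tokens; only the conditioning differs. The sketch below illustrates this under stated assumptions: `TokenScorer`, `mask_entity`, `entity_probability`, and `toy_scorer` are hypothetical names, tokens are whitespace-split words rather than BART's subword tokens, and `toy_scorer` is a made-up stand-in for a real MLM/CMLM. Passing `source=None` gives the prior; passing the document gives the posterior.

```python
import math
from typing import Callable, Optional, Sequence

MASK = "[MASK]"

# Hypothetical scorer interface (not a real BART API): given the masked
# summary c_k, the source document S (None for the prior), the entity
# tokens generated so far, and a candidate next token, return its probability.
TokenScorer = Callable[[str, Optional[str], Sequence[str], str], float]


def mask_entity(summary: str, entity: str) -> str:
    """Build c_k by replacing the first occurrence of the entity with [MASK]."""
    if entity not in summary:
        raise ValueError(f"entity {entity!r} not found in summary")
    return summary.replace(entity, MASK, 1)


def entity_probability(
    entity_tokens: Sequence[str],
    context: str,
    scorer: TokenScorer,
    source: Optional[str] = None,
) -> float:
    """Chain rule: prod_t P(e^t | e^{1..t-1}, c_k[, S]), accumulated in log space."""
    log_p = 0.0
    for t, token in enumerate(entity_tokens):
        p = scorer(context, source, entity_tokens[:t], token)
        if p <= 0.0:
            return 0.0  # one impossible token makes the whole entity impossible
        log_p += math.log(p)
    return math.exp(log_p)


def toy_scorer(context: str, source: Optional[str],
               prefix: Sequence[str], token: str) -> float:
    """Made-up scorer: tokens that occur in the source become more likely."""
    if source is not None and token in source.split():
        return 0.9
    return 0.2


summary = "The mayor of New York spoke on Monday ."
source_doc = "On Monday , the mayor of New York gave a speech about housing ."
c_k = mask_entity(summary, "New York")
tokens = ["New", "York"]

prior = entity_probability(tokens, c_k, toy_scorer)                  # ≈ 0.2 * 0.2
posterior = entity_probability(tokens, c_k, toy_scorer, source_doc)  # ≈ 0.9 * 0.9
print(c_k, prior, posterior)
```

Working in log space avoids numerical underflow when an entity spans many subword tokens; a real implementation would take the per-token probabilities from the model's output distribution rather than from a toy function.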

Training for CMLM

The CMLM is trained on XSum or CNN/DailyMail. For each reference summary, one entity is selected at random and replaced with a special [MASK] token. Given the source document and the masked summary as input, the model is trained to reconstruct the full, unmasked reference summary.
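A single CMLM training example could be assembled as sketched below. This is a minimal illustration under assumptions: entity spans are given as character offsets (for instance from an off-the-shelf NER tagger), the document and masked summary are joined with a hypothetical `SEP` string, summaries without entities are skipped, and `make_cmlm_example` / `CMLMExample` are illustrative names, not code from the paper.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Tuple

MASK = "[MASK]"
SEP = " </s> "  # hypothetical separator between document and masked summary


@dataclass
class CMLMExample:
    source: str  # encoder input: document followed by the masked summary
    target: str  # decoder target: the original, unmasked reference summary


def make_cmlm_example(
    document: str,
    summary: str,
    entity_spans: List[Tuple[int, int]],
    rng: random.Random,
) -> Optional[CMLMExample]:
    """Mask one randomly chosen entity span (start, end) in the reference summary."""
    if not entity_spans:
        return None  # assumption: summaries with no entities are skipped
    start, end = rng.choice(entity_spans)
    masked = summary[:start] + MASK + summary[end:]
    return CMLMExample(source=document + SEP + masked, target=summary)


rng = random.Random(0)
summary = "Alice met Bob in Paris."
spans = [(0, 5), (10, 13), (17, 22)]  # "Alice", "Bob", "Paris"
example = make_cmlm_example("Some source document.", summary, spans, rng)
print(example)
```

Passing a seeded `random.Random` keeps the choice of masked entity reproducible across runs.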

Training a Discriminator

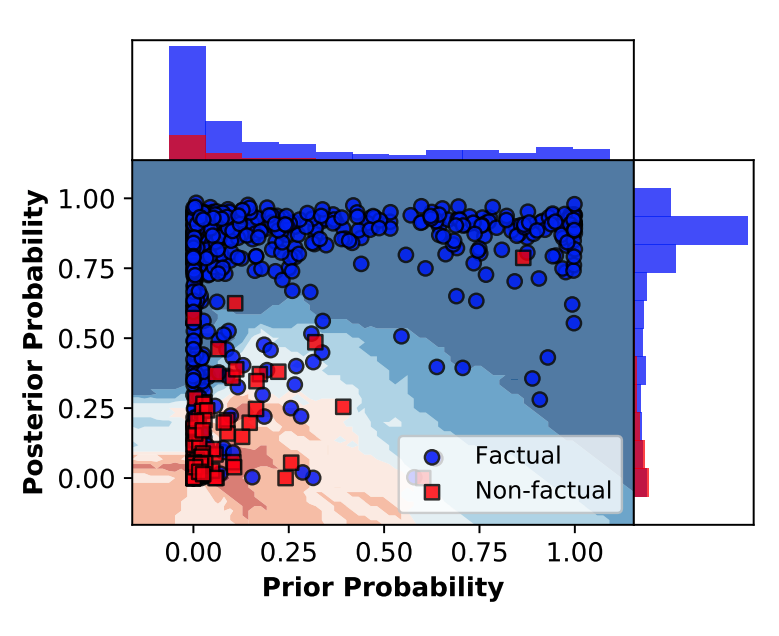

The paper trains a KNN classifier (k=15 in the paper), using the prior and posterior probabilities, plus a binary overlap feature that indicates whether the entity appears in the document.

Here is a visualization of trained KNN with bounds:
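The discriminator can be sketched with a small, dependency-free k-nearest-neighbour classifier over the three features described above. Assumptions: feature values are used unscaled (prior and posterior in [0, 1], overlap in {0, 1}), distances are Euclidean, the overlap feature is approximated by exact substring matching, the labels are illustrative, and `entity_features` / `KNNClassifier` are hypothetical names. In practice a library implementation such as scikit-learn's `KNeighborsClassifier(n_neighbors=15)` would be the usual choice.

```python
import math
from collections import Counter
from typing import Hashable, List, Sequence, Tuple

Features = Tuple[float, float, float]  # (prior, posterior, overlap)


def entity_features(prior: float, posterior: float,
                    entity: str, document: str) -> Features:
    """Binary overlap feature: 1.0 if the entity string occurs in the document."""
    overlap = 1.0 if entity in document else 0.0
    return (prior, posterior, overlap)


class KNNClassifier:
    def __init__(self, k: int = 15) -> None:
        self.k = k
        self.points: List[Features] = []
        self.labels: List[Hashable] = []

    def fit(self, X: Sequence[Features], y: Sequence[Hashable]) -> "KNNClassifier":
        if len(X) != len(y):
            raise ValueError("X and y must have the same length")
        self.points = [tuple(x) for x in X]
        self.labels = list(y)
        return self

    def predict(self, x: Features) -> Hashable:
        # Sort training indices by Euclidean distance to x and keep the k nearest.
        order = sorted(range(len(self.points)),
                       key=lambda i: math.dist(x, self.points[i]))
        votes = Counter(self.labels[i] for i in order[: self.k])
        # Majority vote; on a tie, the label seen first (i.e. nearest) wins.
        return votes.most_common(1)[0][0]


# Illustrative toy data only (not from the paper).
train_X = [(0.1, 0.9, 1.0)] * 10 + [(0.1, 0.05, 0.0)] * 10
train_y = ["factual"] * 10 + ["non-factual"] * 10
clf = KNNClassifier(k=15).fit(train_X, train_y)
print(clf.predict(entity_features(0.12, 0.85, "Paris", "... in Paris ...")))  # → factual
```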

Rules for Determining Hallucination

(1) If the entity can be directly entailed by the source document, it is non-hallucinated;

(2) Otherwise, the entity is hallucinated, and world knowledge is used to decide whether it is factual;

(3) If no information can be found to prove or disprove a hallucinated entity, it is labeled non-factual. (All intrinsic hallucinations are discarded, so the labeling focuses on extrinsic hallucinations.)
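The three rules form a simple decision procedure, sketched below. The rules read as labeling guidelines: the judgments they require (entailment by the source, confirmation by world knowledge) are not computed here and are passed in as two hypothetical inputs, `entailed_by_source` and `world_knowledge` (`True` = confirmed, `False` = contradicted, `None` = no evidence found). `Label` and `annotate_entity` are illustrative names, and intrinsic hallucinations are assumed to have been filtered out beforehand.

```python
from enum import Enum
from typing import Optional


class Label(Enum):
    NON_HALLUCINATED = "non-hallucinated"
    FACTUAL_HALLUCINATION = "factual hallucination"
    NON_FACTUAL_HALLUCINATION = "non-factual hallucination"


def annotate_entity(entailed_by_source: bool,
                    world_knowledge: Optional[bool]) -> Label:
    """Apply rules (1)-(3) to one extrinsic entity.

    world_knowledge: True if world knowledge confirms the entity,
    False if it contradicts it, None if no evidence either way.
    """
    # Rule (1): entailed by the source document -> not a hallucination.
    if entailed_by_source:
        return Label.NON_HALLUCINATED
    # Rule (2): hallucinated, but confirmed by world knowledge -> factual.
    if world_knowledge is True:
        return Label.FACTUAL_HALLUCINATION
    # Rule (2) with a contradiction, or rule (3) with no evidence -> non-factual.
    return Label.NON_FACTUAL_HALLUCINATION


print(annotate_entity(False, None).value)  # → non-factual hallucination
```

Treating "no evidence" the same as "contradicted" mirrors rule (3): a hallucinated entity is counted as factual only when world knowledge positively supports it.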