Authors: Feng Nan, Cicero Nogueira dos Santos, Henghui Zhu, Patrick Ng, Kathleen McKeown, Ramesh Nallapati, Dejiao Zhang, Zhiguo Wang, Andrew O. Arnold, Bing Xiang

Paper reference: https://aclanthology.org/2021.acl-long.536.pdf

Contribution

This paper proposes QUALS, an efficient automatic evaluation metric for the factual consistency of a generated abstractive summary, and designs a contrastive learning algorithm that directly optimizes this metric during training without resorting to the REINFORCE algorithm.

Both automatic metrics and human evaluations show that summaries generated with the proposed algorithm are of higher quality and more factually consistent than those generated by the current SOTA summarization model (BART-large).

Details

Background

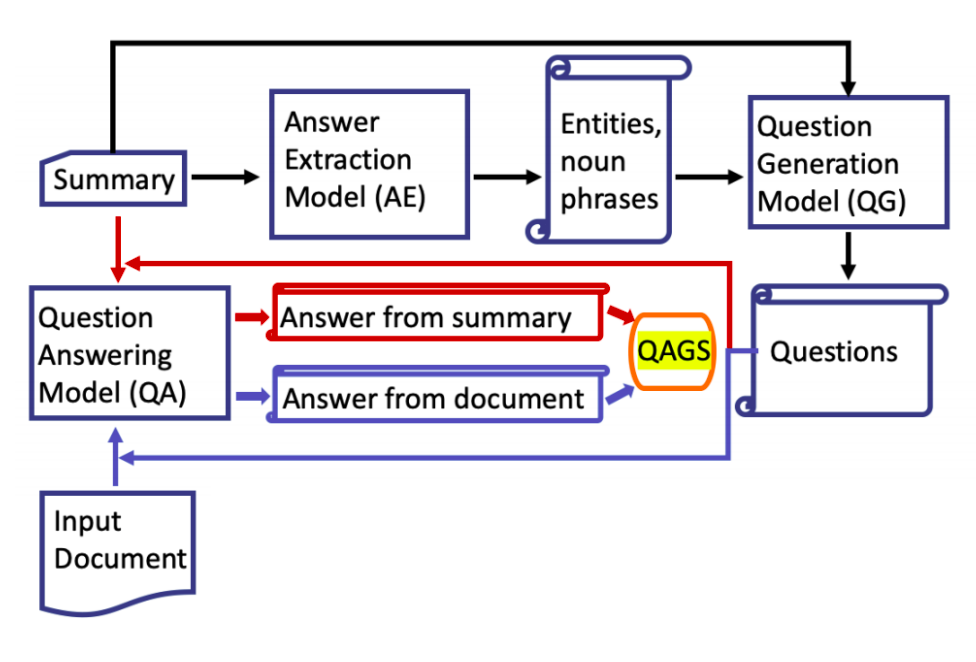

Automatic Evaluation Protocol QAGS

Previous work proposed QAGS (Question Answering and Generation for Summarization), an interpretable evaluation protocol designed to identify factual inconsistencies between a generated summary and its source document.

It consists of four steps:

(1) an answer extraction model extracts named entities and noun phrases from the summary as candidate answers;

(2) a question generation model takes the summary concatenated with each candidate answer and generates a corresponding question;

(3) a QA model is used to answer each generated question in the context of the summary and the input document, separately;

(4) the answers from the QA model based on the summary and the input document are compared using word-level overlap.
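Step (4) can be illustrated with a small sketch. The notes only say "word-level overlap"; the version below assumes a token-level F1 over lowercased, whitespace-split answers, which is one common way to measure such overlap. The function names `token_f1` and `qags_style_score` are hypothetical and are not taken from the paper.

```python
from collections import Counter


def token_f1(answer_from_summary: str, answer_from_document: str) -> float:
    """Token-level F1 between two answer strings (assumed overlap measure)."""
    pred = answer_from_summary.lower().split()
    ref = answer_from_document.lower().split()
    if not pred or not ref:
        # Two empty answers agree; one empty answer disagrees.
        return float(pred == ref)
    # Multiset intersection counts each shared token at most as often
    # as it appears in both answers.
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def qags_style_score(answer_pairs):
    """Average the per-question overlap over all generated questions.

    answer_pairs: iterable of (answer given summary, answer given document).
    """
    scores = [token_f1(a_sum, a_doc) for a_sum, a_doc in answer_pairs]
    return sum(scores) / len(scores) if scores else 0.0
```

A summary whose answers match those obtained from the document scores close to 1, while answers that share no words with the document-based answers pull the score toward 0.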

This paper discusses some drawbacks of this protocol, chiefly its computational inefficiency, since it involves three separate models.

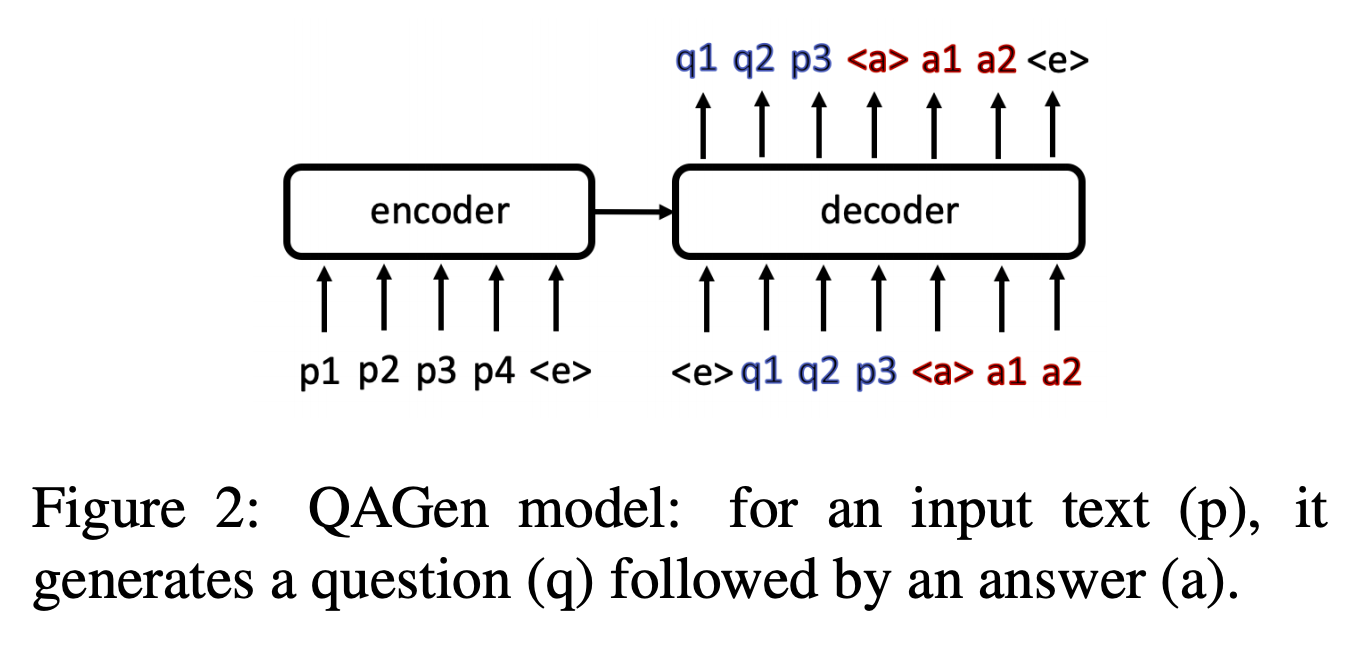

QAGen

QAGen is an end-to-end model capable of generating both questions and answers from a summary.

Automatic Evaluation Protocol QUALS

This paper proposes a metric QUALS (QUestion Answering with Language model score for Summarization) to measure factual consistency.

(1) It first employs a QAGen model (using BART) to generate diverse question-answer pairs from a summary and picks high-quality ones.

(2) Then the paper evaluates the likelihood that the QAGen model generates the same question-answer pairs given the source document.

Roughly speaking, the QUALS score is defined as the degree to which the same question-answer pairs can be generated when either the source document or the summary is taken as input.
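As a rough illustration of this idea, the sketch below compares how likely each question-answer pair is under the two inputs. It assumes the per-token log-probabilities have already been obtained from the QAGen model and are passed in as plain lists; the length normalization, the direction of the difference (document minus summary), and the name `quals_sketch` are assumptions made for this example, not details confirmed by these notes.

```python
from typing import List, Sequence, Tuple


def length_normalized_loglik(token_logprobs: Sequence[float]) -> float:
    """Average per-token log-probability of one question-answer pair."""
    return sum(token_logprobs) / len(token_logprobs)


def quals_sketch(pairs: List[Tuple[Sequence[float], Sequence[float]]]) -> float:
    """Average likelihood gap over all question-answer pairs.

    Each element of `pairs` holds, for one question-answer pair generated
    from the summary:
      - token log-probs of the pair when conditioning on the document
      - token log-probs of the same pair when conditioning on the summary
    A value near 0 means the document supports the pairs about as well as
    the summary does; a strongly negative value suggests inconsistency.
    """
    if not pairs:
        raise ValueError("at least one question-answer pair is required")
    diffs = []
    for logprobs_doc, logprobs_summary in pairs:
        if len(logprobs_doc) != len(logprobs_summary):
            raise ValueError("both scorings must cover the same tokens")
        diffs.append(
            length_normalized_loglik(logprobs_doc)
            - length_normalized_loglik(logprobs_summary)
        )
    return sum(diffs) / len(diffs)
```

Because the same model scores the same token sequence under both inputs, no separate answer extraction or QA model is needed at this stage, which is where the efficiency gain over QAGS would come from.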

Experiments show that QUALS correlates very well with QAGS.

CONtrastive SEQ2seq learning (CONSEQ)

The paper further designs a contrastive seq-to-seq learning algorithm (CONSEQ), which can maximize the proposed factual consistency metric during the training phase without using the REINFORCE algorithm.

CONSEQ

After normal fine-tuning on the training data, the model (BART) goes through a further round of training on a newly constructed contrast set.

The contrast set is constructed by selecting, from the training data, high-quality factually consistent and factually inconsistent summaries based on their QUALS scores. The model is then trained to maximize the likelihood of generating the consistent summaries and to minimize the likelihood of generating the inconsistent ones.
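The two stages described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the selection uses two hypothetical QUALS thresholds (`pos_threshold`, `neg_threshold`), and "minimizing the likelihood" is implemented as a token-level `-log(1 - p)` penalty, which is one possible choice rather than a detail confirmed by these notes. Token probabilities are passed in as plain lists instead of coming from a real model.

```python
import math
from typing import List, Sequence, Tuple

Scored = Tuple[str, str, float]  # (document, summary, QUALS score)


def build_contrast_sets(
    scored: List[Scored], pos_threshold: float, neg_threshold: float
) -> Tuple[List[Tuple[str, str]], List[Tuple[str, str]]]:
    """Split candidates into consistent (positive) and inconsistent (negative) sets.

    Candidates whose score falls between the two thresholds are discarded.
    """
    positives = [(d, s) for d, s, q in scored if q >= pos_threshold]
    negatives = [(d, s) for d, s, q in scored if q <= neg_threshold]
    return positives, negatives


def positive_loss(token_probs: Sequence[float], eps: float = 1e-8) -> float:
    """Negative log-likelihood: small when the summary tokens are likely."""
    return -sum(math.log(max(p, eps)) for p in token_probs)


def negative_loss(token_probs: Sequence[float], eps: float = 1e-8) -> float:
    """Assumed penalty for negatives: small when the summary tokens are unlikely."""
    return -sum(math.log(max(1.0 - p, eps)) for p in token_probs)


def contrastive_loss(
    pos_batch: List[Sequence[float]], neg_batch: List[Sequence[float]]
) -> float:
    """Sum of both terms; each element is the token probabilities of one summary."""
    loss = sum(positive_loss(p) for p in pos_batch)
    loss += sum(negative_loss(p) for p in neg_batch)
    return loss
```

Because both terms are ordinary differentiable functions of the token probabilities, a loss of this shape can be minimized with standard gradient descent, which is consistent with the notes' point that no REINFORCE-style policy gradient is required.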