Authors: Zi-Yi Dou, Pengfei Liu, Hiroaki Hayashi, Zhengbao Jiang, Graham Neubig

Paper Reference: https://arxiv.org/pdf/2010.08014.pdf

Contribution

This paper proposes a guided summarization framework (GSum) that constrains abstractive summarization by taking different types of guidance signals (automatically extracted or user-specified) as additional input. Experiments show that the model does learn to depend on the guidance signals, and that the generated text is faithful and contains more novel n-grams. The framework works better when the dataset is more extractive.

The paper investigates four types of guidance signals and shows that they are complementary to each other, so aggregating the outputs obtained with different signals could yield further improvements.

Details

Model

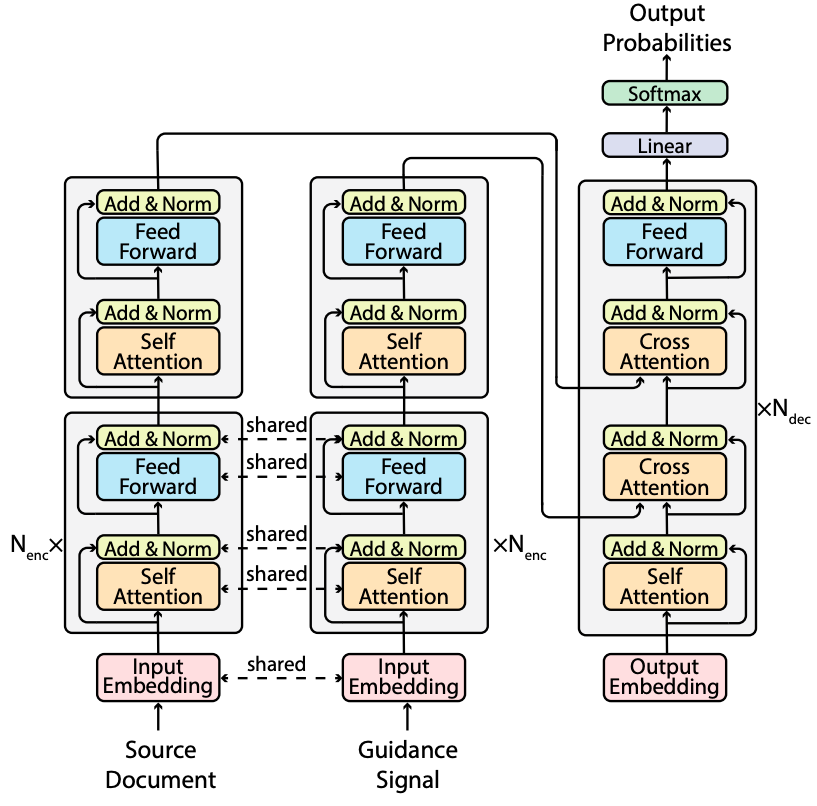

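The framework is built on a modified BART with two encoders, which encode the source document and the guidance signal respectively. Each encoder layer applies a self-attention sublayer and a feed-forward sublayer, each followed by a residual connection and layer normalization (LN):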

$$ \begin{aligned} &\mathbf{x}=\mathrm{LN}(\mathbf{x}+\operatorname{SelfAttn}(\mathbf{x})), \\ &\mathbf{x}=\mathrm{LN}(\mathbf{x}+\text{FeedForward}(\mathbf{x})). \end{aligned} $$

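The decoder attends to both the source document and the guidance signal. In each decoder layer it first attends to the encoded guidance $\mathbf{g}$, which tells the decoder which parts of the source document to focus on, and then attends to the encoded source document $\mathbf{x}$: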

$$ \begin{aligned} &\mathbf{y}=\mathrm{LN}(\mathbf{y}+\operatorname{SelfAttn}(\mathbf{y})), \\ &\mathbf{y}=\mathrm{LN}(\mathbf{y}+\operatorname{CrossAttn}(\mathbf{y}, \mathbf{g})), \\ &\mathbf{y}=\mathrm{LN}(\mathbf{y}+\operatorname{CrossAttn}(\mathbf{y}, \mathbf{x})), \\ &\mathbf{y}=\mathrm{LN}(\mathbf{y}+\text {FeedForward}(\mathbf{y})) . \end{aligned} $$
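To make the layer ordering concrete, here is a minimal pure-Python sketch of one encoder layer and one GSum-style decoder layer. It is not the authors' implementation: attention is single-head with no learned projections, LN has no gain or bias, and the feed-forward sublayer is a plain ReLU (all simplifying assumptions). The helper names (`attention`, `add_and_norm`, and so on) are hypothetical. What it does show is the post-LN residual pattern and the decoder's order of operations: masked self-attention, then cross-attention to the guidance $\mathbf{g}$, then cross-attention to the source $\mathbf{x}$.

```python
import math


def layer_norm(v, eps=1e-5):
    """LN without learned gain/bias (simplifying assumption)."""
    mean = sum(v) / len(v)
    var = sum((a - mean) ** 2 for a in v) / len(v)
    return [(a - mean) / math.sqrt(var + eps) for a in v]


def attention(queries, keys_values, causal=False):
    """Single-head scaled dot-product attention with no learned projections:
    keys and values are the input vectors themselves (assumption)."""
    d = len(queries[0])
    out = []
    for i, q in enumerate(queries):
        # With causal=True, position i only sees positions 0..i (decoder self-attention).
        visible = keys_values[: i + 1] if causal else keys_values
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in visible]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]  # numerically stable softmax
        z = sum(weights)
        out.append([sum(w * v[j] for w, v in zip(weights, visible)) / z for j in range(d)])
    return out


def feed_forward(v):
    """Placeholder position-wise FFN: a ReLU with identity weights (assumption)."""
    return [max(0.0, a) for a in v]


def add_and_norm(xs, sublayer_out):
    """x = LN(x + Sublayer(x)), applied independently at each position."""
    return [layer_norm([a + b for a, b in zip(x, s)]) for x, s in zip(xs, sublayer_out)]


def encoder_layer(x):
    x = add_and_norm(x, attention(x, x))               # LN(x + SelfAttn(x))
    x = add_and_norm(x, [feed_forward(v) for v in x])  # LN(x + FeedForward(x))
    return x


def decoder_layer(y, g, x):
    y = add_and_norm(y, attention(y, y, causal=True))  # LN(y + SelfAttn(y)), masked
    y = add_and_norm(y, attention(y, g))               # LN(y + CrossAttn(y, g)): guidance first
    y = add_and_norm(y, attention(y, x))               # LN(y + CrossAttn(y, x)): then the source
    y = add_and_norm(y, [feed_forward(v) for v in y])  # LN(y + FeedForward(y))
    return y


# With no learned weights the "two encoders" reduce to the same function here;
# in GSum the two encoders have learned weights that this sketch omits.
x_enc = encoder_layer([[1.0, 0.0, 2.0], [0.5, 1.5, -1.0], [0.0, 1.0, 1.0]])  # source document
g_enc = encoder_layer([[2.0, 1.0, 0.0]])                                      # guidance signal
out = decoder_layer([[0.1, 0.2, 0.3], [1.0, -1.0, 0.0]], g_enc, x_enc)
print(len(out), len(out[0]))  # 2 3
```

Because every sublayer after the masked self-attention works position by position, the first output vector does not change when later decoder inputs change, which is what allows left-to-right generation.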

Guidance

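Given training triples $\langle \mathbf{x}^{i}, \mathbf{y}^{i}, \mathbf{g}^{i} \rangle$ of source document, reference summary, and guidance signal, the parameters $\theta$ are trained to maximize the conditional log-likelihood of the summary:

$$ \arg \max_{\theta} \sum_{\left\langle\mathbf{x}^{i}, \mathbf{y}^{i}, \mathbf{g}^{i}\right\rangle \in\langle\mathcal{X}, \mathcal{Y}, \mathcal{G}\rangle} \log p\left(\mathbf{y}^{i} \mid \mathbf{x}^{i}, \mathbf{g}^{i} ; \theta\right) $$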
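For an autoregressive decoder, each term $\log p(\mathbf{y}^{i} \mid \mathbf{x}^{i}, \mathbf{g}^{i}; \theta)$ factorizes into a sum of per-token log-probabilities $\log p(y_t \mid y_{<t}, \mathbf{x}, \mathbf{g}; \theta)$. The sketch below evaluates the objective for a given model. `toy_model` is a hypothetical stand-in, not a trained GSum model: it ignores the decoding prefix and simply up-weights source tokens that appear in the guidance, so guidance that matches the reference raises the likelihood.

```python
import math


def sequence_log_prob(model, x, y, g):
    """log p(y | x, g) = sum_t log p(y_t | y_<t, x, g) for an autoregressive model."""
    return sum(math.log(model(y[:t], x, g)[y[t]]) for t in range(len(y)))


def corpus_objective(model, data):
    """Sum of log p(y^i | x^i, g^i) over <x, y, g> triples; training maximizes this over theta."""
    return sum(sequence_log_prob(model, x, y, g) for x, y, g in data)


def toy_model(prefix, x, g, boost=3.0):
    """Hypothetical model: a distribution over tokens of x and g that gives tokens
    present in the guidance `boost` times the weight of other tokens. Ignores `prefix`.
    Assumes every summary token occurs in x or g (otherwise it has no probability)."""
    vocab = sorted(set(x) | set(g))
    scores = {w: (boost if w in g else 1.0) for w in vocab}
    z = sum(scores.values())
    return {w: s / z for w, s in scores.items()}


x = "the cat sat on the mat".split()
y = ["cat", "sat"]
with_guidance = corpus_objective(toy_model, [(x, y, ["cat", "sat"])])
without_guidance = corpus_objective(toy_model, [(x, y, [])])
print(with_guidance > without_guidance)  # True
```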

Guidance is some type of signal $g$ that is fed into the model in addition to the source document $x$. There are two ways to obtain the guidance signal:

(1) Oracle extraction. Use both the source document $x$ and the reference summary $y$ to deduce the signal $g$ that is most likely to be useful for generating $y$.

(2) Automatic prediction/extraction. Use an automated system (e.g., BertExt or BertAbs) to infer the guidance signal $g$ from the input $x$ alone, since the reference summary is not available at test time.
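As an illustration of oracle extraction for highlighted sentences, the sketch below greedily adds source sentences while they improve a unigram-overlap F1 against the reference summary, in the style of the greedy oracles commonly used to label extractive summarization data. The overlap score is a simplified stand-in for ROUGE, and `greedy_oracle`, `unigram_f1`, and `max_sents` are hypothetical names; the paper's exact oracle procedure may differ.

```python
from collections import Counter


def unigram_f1(candidate, reference):
    """Unigram-overlap F1 (a simplified ROUGE-1 stand-in) between two token lists."""
    overlap = sum((Counter(candidate) & Counter(reference)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(candidate)
    recall = overlap / len(reference)
    return 2 * precision * recall / (precision + recall)


def greedy_oracle(doc_sentences, summary_tokens, max_sents=3):
    """Greedily pick sentence indices of x whose union best overlaps the reference y."""
    selected, best = [], 0.0
    while len(selected) < max_sents:
        candidates = []
        for i in range(len(doc_sentences)):
            if i in selected:
                continue
            # Score the current selection plus sentence i, kept in document order.
            tokens = [t for j in sorted(selected + [i]) for t in doc_sentences[j]]
            candidates.append((unigram_f1(tokens, summary_tokens), i))
        if not candidates:
            break
        score, idx = max(candidates)
        if score <= best:  # stop once no remaining sentence improves the score
            break
        selected.append(idx)
        best = score
    return sorted(selected)


doc = [s.split() for s in [
    "the storm hit the coast on monday",
    "officials said schools will stay closed",
    "the local team won its game",
]]
ref = "storm hit coast schools closed".split()
print(greedy_oracle(doc, ref))  # [0, 1]
```

At test time the same slot would instead be filled by an automatic extractor's prediction from $x$ alone.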

The paper investigates the following four types of guidance signals (oracle-extracted at training time, automatically predicted or user-specified at test time):

- Highlighted Sentences. Select salient sentences from the source document.

- Keywords. Select salient keywords from highlighted sentences.

- Relations. Select salient relational tuples (subject, relation, object).

- Retrieved Summaries. Select the most similar source documents/summaries.
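Assuming each guidance signal is passed to its encoder as a single token sequence, every type above has to be flattened into text. The sketch below shows one hypothetical way to do that: the `" | "` separator and the "subject relation object" linearization are assumptions, not the paper's exact format, and retrieval uses token-set Jaccard similarity as a stand-in for whatever retrieval system is used in practice.

```python
def jaccard(a, b):
    """Token-set Jaccard similarity (stand-in retrieval score)."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if a | b else 0.0


def retrieve_summaries(query_tokens, corpus, k=1):
    """Return summaries of the k training pairs whose documents best match the query
    (e.g., the source document x). corpus: list of (document_tokens, summary_text)."""
    ranked = sorted(corpus, key=lambda pair: jaccard(query_tokens, pair[0]), reverse=True)
    return [summary for _, summary in ranked[:k]]


def serialize_guidance(kind, items, sep=" | "):
    """Flatten one guidance type into a single string for the guidance encoder.
    Hypothetical format: items joined by `sep`; relation triples joined by spaces."""
    if kind == "relations":
        items = [" ".join(triple) for triple in items]  # (subject, relation, object) -> text
    elif kind not in ("sentences", "keywords", "retrieved"):
        raise ValueError(f"unknown guidance type: {kind}")
    return sep.join(items)


rel = serialize_guidance("relations", [("storm", "hit", "coast"), ("schools", "stay", "closed")])
kw = serialize_guidance("keywords", ["storm", "coast", "schools"])
corpus = [
    ("storm floods coast town".split(), "Storm floods coastal town."),
    ("team wins final game".split(), "Local team wins final."),
]
retrieved = retrieve_summaries("storm hit the coast".split(), corpus)
print(rel)        # storm hit coast | schools stay closed
print(kw)         # storm | coast | schools
print(retrieved)  # ['Storm floods coastal town.']
```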

Using oracle guidance at training time has the major advantage that the guidance signals are highly informative, which encourages the model to pay more attention to them at test time.

Experiments show that using highlighted sentences as guidance achieves the best performance among the four guidance types.