Authors: Kaj Bostrom, Xinyu Zhao, Swarat Chaudhuri, Greg Durrett

Paper reference: https://arxiv.org/abs/2104.08825

Contribution

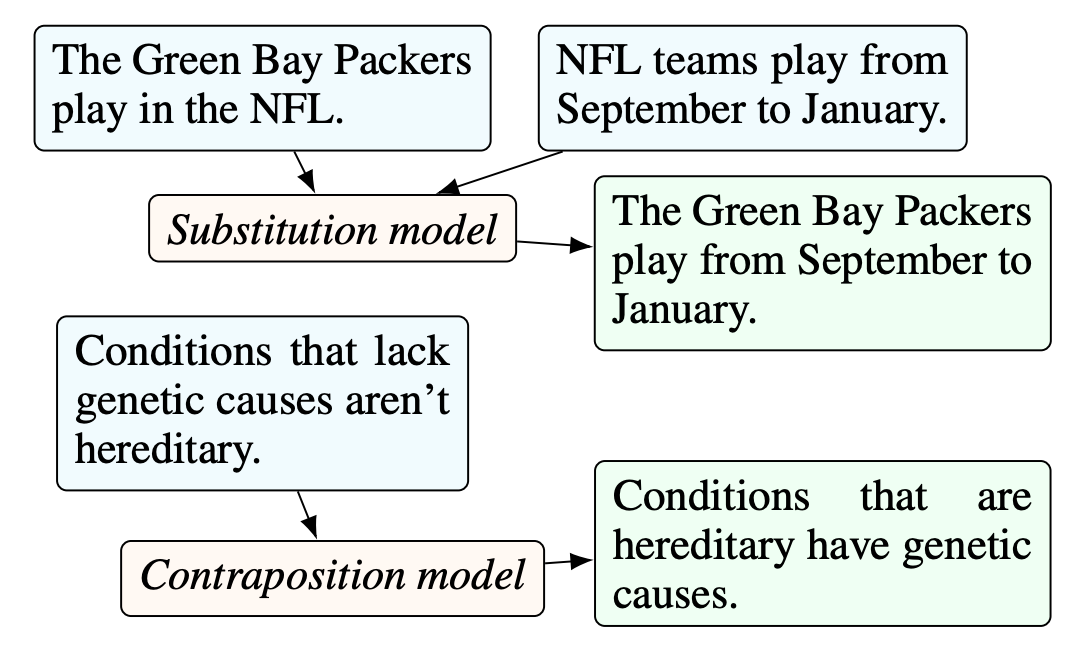

This paper proposes ParaPattern, an automated data generation method for building models that perform deductive reasoning by generating logical transformations (in particular, substitution and contraposition) from diverse natural language inputs (premise statements) in an unsupervised way. Experiments show that training on the generated data yields robust operation models that produce consistent logical transformations over a diverse range of natural language inputs.

Details

Data Collection

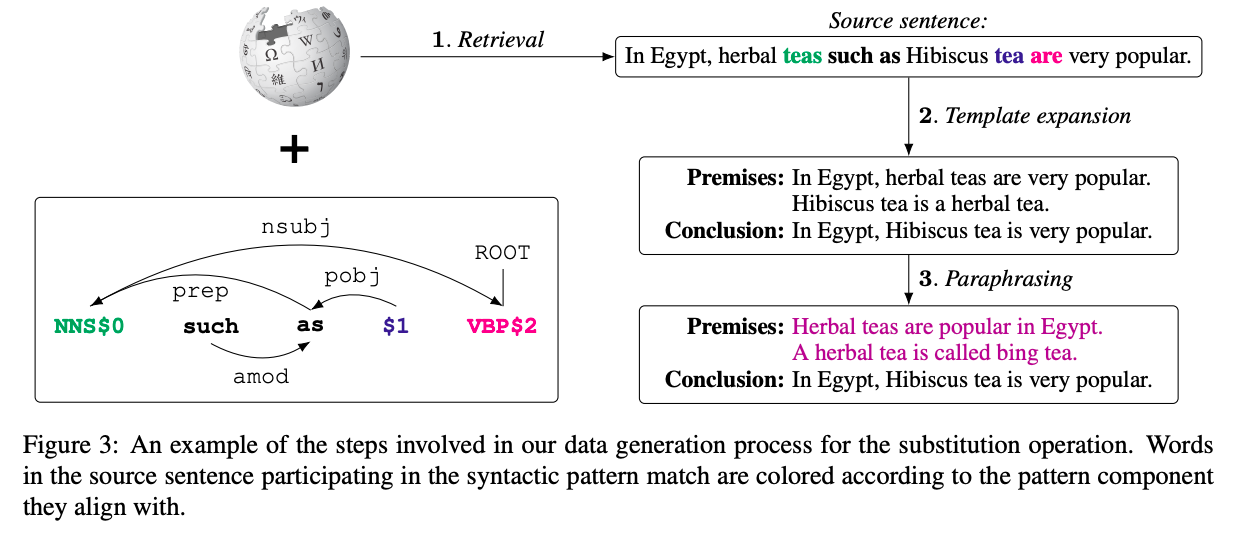

The proposed method, ParaPattern, builds lexically varied and diverse training data from three components:

(1) syntactic retrieval from Wikipedia;

(2) template-based example construction;

(3) a pretrained paraphrasing model.

Once data for an operation has been generated, the paper uses it to fine-tune a BART-Large model.

Source Scraping

Source sentences suitable for template expansion are retrieved from Wikipedia article text. The paper uses six pattern variations to gather source sentences for the substitution template and two patterns for the contraposition template.
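As a rough illustration of the retrieval step, the sketch below filters a small list of sentences with a single pattern and records the spans bound to each match variable. It is a simplification under stated assumptions: it uses a surface regular expression rather than a syntactic pattern over a parse, and the pattern, the variable names (`subject`, `category`, `property`), and the example sentences are all hypothetical, not taken from the paper.

```python
import re

# Hypothetical surface pattern standing in for a syntactic pattern:
#   "<subject> is/are <a|an|the category> that <property>."
# The paper's patterns are syntactic; a regex is used here only to keep
# the sketch dependency-free.
PATTERN = re.compile(
    r"^(?P<subject>[A-Z][^,;:]*?) (?:is|are) "
    r"(?P<category>(?:an?|the) [^,;:]*?) "
    r"that (?P<property>[^,;:]+?)\.$"
)

def scrape_sources(sentences):
    """Return (sentence, bindings) pairs for sentences matching PATTERN."""
    matches = []
    for sentence in sentences:
        stripped = sentence.strip()
        m = PATTERN.match(stripped)
        if m:
            # groupdict() maps each match variable to the text it captured.
            matches.append((stripped, m.groupdict()))
    return matches

# Made-up stand-ins for Wikipedia article sentences.
corpus = [
    "A barometer is an instrument that measures air pressure.",
    "The city was founded in 1850.",
    "Granite is a rock that forms from cooling magma.",
]
sources = scrape_sources(corpus)
for sentence, bindings in sources:
    print(bindings)
```

Only the first and third sentences match. The bindings are what a later template step would consume.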

Template Expansion

The template expansion algorithm expands source sentences into generated examples by breaking out dependency subtrees rooted at each match variable and rearranging them according to the template structure.
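The rearrangement idea can be sketched in a few lines, assuming the match variables have already been bound to text spans. This sketch works on plain strings rather than dependency subtrees, and the `Template` class, the substitution-style template, and the bindings are hypothetical illustrations, not the templates used in the paper.

```python
from dataclasses import dataclass

@dataclass
class Template:
    inputs: list  # format strings, one per premise sentence
    output: str   # format string for the conclusion

def as_sentence(text):
    """Capitalize the first character and add a final period."""
    text = text.strip()
    return text[0].upper() + text[1:] + "."

def expand(template, bindings):
    """Fill each slot with its bound span and return (premises, conclusion)."""
    premises = [as_sentence(p.format(**bindings)) for p in template.inputs]
    conclusion = as_sentence(template.output.format(**bindings))
    return premises, conclusion

# Hypothetical substitution-style template: the second premise says something
# about the category, and the conclusion substitutes the subject for it.
SUBSTITUTION = Template(
    inputs=["{subject} is {category}", "{category} {property}"],
    output="{subject} {property}",
)

# Hypothetical bindings, as a matcher might produce them.
bindings = {
    "subject": "granite",
    "category": "a rock",
    "property": "forms from cooling magma",
}
premises, conclusion = expand(SUBSTITUTION, bindings)
print(premises)
print(conclusion)
```

Working on strings ignores agreement and word-order issues that a subtree-based expansion can handle, so this should be read only as a picture of how slots are rearranged into inputs and an output.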

Paraphrase Augmentation

The template-expanded input sentences are paraphrased with a PEGASUS model fine-tuned for paraphrasing.

Paraphrasing the input sentences automatically adds a denoising component to the fine-tuning objective, which results in more robust models. It also introduces lexical variation in the inputs that the template matches may not capture.
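A minimal sketch of how such training pairs might be assembled: only the input premises are paraphrased, while the target conclusion stays as the template produced it, so the model has to map noisier inputs to a clean output. The `toy_paraphrase` function is a hypothetical stand-in for the PEGASUS paraphraser, and the `source`/`target` field names and the choice to join premises with a space are assumptions made for illustration.

```python
def toy_paraphrase(sentence):
    """Hypothetical stand-in for a paraphrasing model: fixed phrase swaps."""
    swaps = {"forms": "is formed", "a rock": "a kind of rock"}
    for old, new in swaps.items():
        sentence = sentence.replace(old, new)
    return sentence

def augment(examples, paraphrase):
    """Paraphrase each premise; keep the template-generated conclusion as is."""
    pairs = []
    for premises, conclusion in examples:
        noisy_premises = [paraphrase(p) for p in premises]
        pairs.append({
            # Assumption: premises are concatenated into one input string.
            "source": " ".join(noisy_premises),
            "target": conclusion,
        })
    return pairs

# One template-expanded example (made up for illustration).
examples = [
    (
        ["Granite is a rock.", "A rock forms from cooling magma."],
        "Granite forms from cooling magma.",
    )
]
pairs = augment(examples, toy_paraphrase)
print(pairs[0]["source"])
print(pairs[0]["target"])
```

Passing the paraphraser in as a callable keeps the pairing logic independent of whichever paraphrasing model is actually used.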