Authors: Esin Durmus, He He, Mona Diab

Paper reference: https://aclanthology.org/2020.acl-main.454.pdf

Contribution

This paper addresses the problem of evaluating the faithfulness of a generated summary to its source document. It identifies a trade-off between the abstractiveness and the faithfulness of summaries, and proposes FEQA, an automatic question answering (QA) based faithfulness metric that correlates better with human judgements than existing metrics. Finally, it points out some limitations of QA-based evaluation and suggests still relying on human-in-the-loop evaluation when assessing summary quality.

Details

The Abstractiveness-Faithfulness Tradeoff

The paper studies the faithfulness of generated summaries on two datasets: CNN/DM (more extractive) and XSum (highly abstractive).

Experiments show that, on both datasets, outputs with less word overlap with the source document are more likely to be unfaithful, and the number of unfaithful sentences increases as summaries become more abstractive.
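To make "word overlap" concrete, here is a minimal sketch of one possible extractiveness proxy: the fraction of a summary's n-grams that also appear in the source document. This is an illustrative assumption, not necessarily the exact overlap measure used in the paper; the function names, the lowercase whitespace tokenization, and the example sentences are all hypothetical.

```python
from typing import List, Set, Tuple


def ngrams(tokens: List[str], n: int) -> List[Tuple[str, ...]]:
    """Return all contiguous n-grams of a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def overlap_ratio(summary: str, document: str, n: int = 1) -> float:
    """Fraction of summary n-grams that also occur in the document.

    Illustrative proxy (not necessarily the paper's measure): higher values
    suggest a more extractive summary, lower values a more abstractive one.
    Tokenization is naive lowercase whitespace splitting.
    """
    summary_ngrams = ngrams(summary.lower().split(), n)
    if not summary_ngrams:
        return 0.0
    doc_ngrams: Set[Tuple[str, ...]] = set(ngrams(document.lower().split(), n))
    hits = sum(1 for g in summary_ngrams if g in doc_ngrams)
    return hits / len(summary_ngrams)


document = "the council approved the new budget on monday after a long debate"
extractive = "the council approved the new budget on monday"
abstractive = "lawmakers signed off on spending plans this week"

print(overlap_ratio(extractive, document))   # 1.0
print(overlap_ratio(abstractive, document))  # 0.125 (only "on" is shared)
```

Under the paper's finding, summaries at the low end of such an overlap scale are the ones most likely to contain unfaithful content.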

FEQA: Faithfulness Evaluation with Question Answering

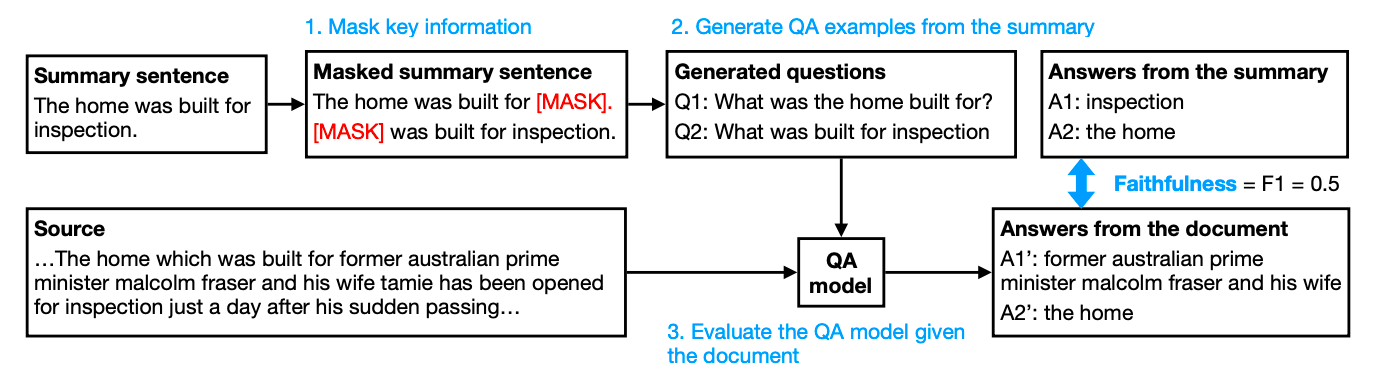

Given a summary sentence and its source document, important text spans in the summary (e.g., noun phrases and entities) are identified with a constituency parser and an NER model, and then masked. Each masked span is treated as a gold answer, and a BART model generates the corresponding question. Finally, a QA model answers these questions using the source document. The more the answers found in the document match the spans from the summary, the more faithful the summary is.
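The pipeline can be sketched as follows. This is a toy illustration under stated assumptions, not the authors' implementation: a regex span extractor stands in for the constituency parser and NER model, a cloze-style masked sentence stands in for the BART-generated question, and a regex matcher stands in for the QA model. Comparing answers with SQuAD-style token F1 and averaging over questions is an assumed scoring rule. All function names (`feqa_like_score`, `toy_spans`, `toy_qg`, `toy_qa`) are hypothetical.

```python
import re
from collections import Counter
from typing import Callable, List

# Hypothetical component types standing in for the paper's models.
SpanExtractor = Callable[[str], List[str]]      # parser + NER in the paper
QuestionGenerator = Callable[[str, str], str]   # BART in the paper
QAModel = Callable[[str, str], str]             # QA model in the paper


def token_f1(prediction: str, gold: str) -> float:
    """SQuAD-style token-level F1 between two answer strings."""
    pred_tokens = prediction.lower().split()
    gold_tokens = gold.lower().split()
    num_same = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if num_same == 0:
        return 0.0
    precision = num_same / len(pred_tokens)
    recall = num_same / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


def feqa_like_score(summary: str, document: str, extract_spans: SpanExtractor,
                    generate_question: QuestionGenerator,
                    answer_question: QAModel) -> float:
    """Average F1 between summary spans and the answers found in the document."""
    spans = extract_spans(summary)
    if not spans:
        return 0.0
    scores = []
    for span in spans:
        question = generate_question(summary, span)       # the span is the gold answer
        doc_answer = answer_question(question, document)  # answer from the source
        scores.append(token_f1(doc_answer, span))
    return sum(scores) / len(scores)


# --- Toy stand-ins (hypothetical; real models would replace them) ---
def toy_spans(summary: str) -> List[str]:
    # Runs of capitalized words, or numbers, as a crude proxy for entities/NPs.
    return re.findall(r"[A-Z][a-z]+(?: [A-Z][a-z]+)*|\d+", summary)


def toy_qg(summary: str, span: str) -> str:
    # Cloze-style "question": the summary with the answer span masked out.
    return summary.replace(span, "[MASK]", 1)


def toy_qa(question: str, document: str) -> str:
    # Fill the mask by matching the cloze's surrounding words in the document.
    parts = question.rstrip(".").split("[MASK]", 1)
    if len(parts) != 2:
        return ""
    left, right = parts
    pattern = re.escape(left) + r"(\w+(?: \w+)*?)\b" + re.escape(right)
    match = re.search(pattern, document)
    return match.group(1) if match else ""


document = "Alice Smith joined Acme in 2019 as an engineer."
faithful = "Alice Smith joined Acme in 2019."
unfaithful = "Alice Smith joined Acme in 2021."

print(feqa_like_score(faithful, document, toy_spans, toy_qg, toy_qa))    # 1.0
print(feqa_like_score(unfaithful, document, toy_spans, toy_qg, toy_qa))  # 0.0
```

For the unfaithful summary, the question "Alice Smith joined Acme in [MASK]" is answered with "2019" from the document, which does not match "2021"; the strict toy matcher cannot answer the other two questions at all, whereas a real QA model would be more forgiving. Because each component is passed in as a callable, real models could replace the stubs without changing the scoring loop.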

Metric comparison

Content selection metrics (e.g., ROUGE, BERTScore) compare the content of the generated summary with that of the reference summary.

Faithfulness metrics (e.g., FEQA) compare the generated summary with the source document.

The correlation of ROUGE, BERTScore, and learned entailment models with human judgements of faithfulness drops significantly on highly abstractive summaries. These metrics rely more on lexical or conceptual overlap than on content overlap. FEQA, in contrast, correlates much more strongly with human judgements.
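Comparing metrics this way means correlating per-summary metric scores with per-summary human faithfulness judgements. Below is a minimal plain-Python sketch of Pearson and Spearman correlation, assuming these are the statistics of interest (they are standard choices; the paper's exact setup may differ). The scores are made-up toy values, not results from the paper.

```python
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length sequences.

    Undefined (raises ZeroDivisionError) if either sequence is constant.
    """
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman correlation: Pearson correlation of the tie-averaged ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Toy per-summary scores (made up for illustration, not from the paper).
human = [0.9, 0.2, 0.7, 0.4, 0.1]
metric = [0.8, 0.3, 0.6, 0.5, 0.2]
print(spearman(human, metric))  # 1.0 (identical rankings)
print(pearson(human, metric))
```

In practice, `scipy.stats.pearsonr` and `scipy.stats.spearmanr` compute the same quantities; the hand-written version only makes the rank-then-correlate step explicit.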