Authors: Yuexiang Xie, Fei Sun, Yang Deng, Yaliang Li, Bolin Ding

Paper reference: https://arxiv.org/pdf/2108.13134.pdf

Contribution

The paper proposes a Counterfactual Consistency (CoCo) metric to evaluate the factual consistency in text summarization via counterfactual estimation by formulating the causal relationship among the source document, the generated summary, and the language prior. In particular, the goal is to remove the effect of language prior, which can cause hallucination, from the total causal effect on the generated summary. The proposed metric provides a simple yet effective way to evaluate consistency without relying on other auxiliary tasks such as textual entailment or QA.

CoCo achieves a significant improvement against the existing automatic metrics for text summarization in terms of the correlation with human annotations.

Details

The intuition is that when texts are generated more relying on the source document rather than the language prior, they should be more likely to be factually consistent w.r.t. the source documents.

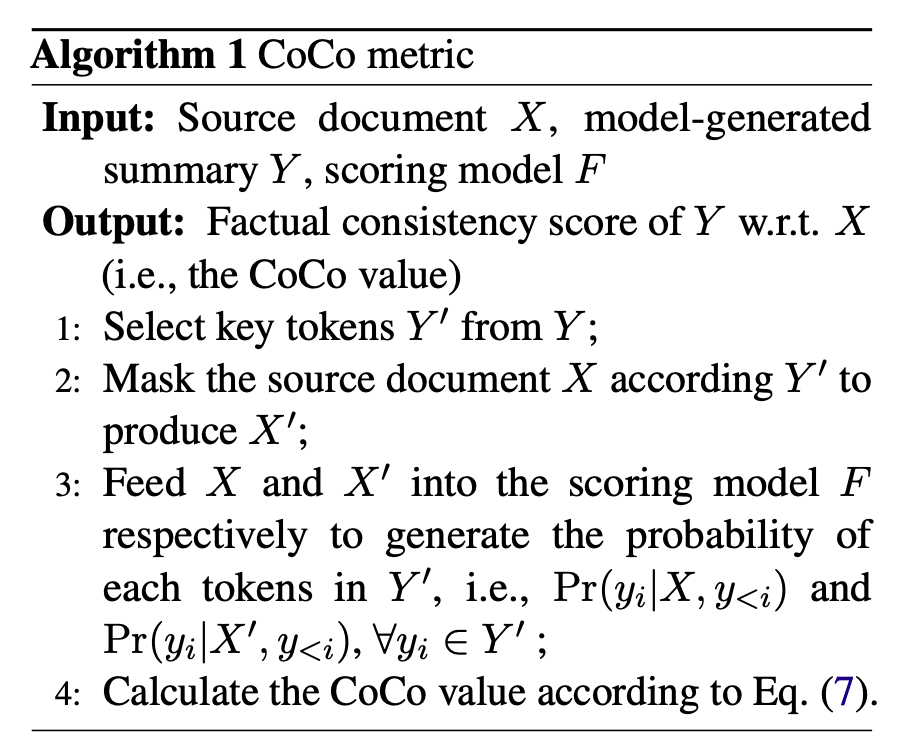

Algorithm

$$ \mathrm{CoCo}=\frac{1}{\left|Y^{\prime}\right|} \sum_{y_{t} \in Y^{\prime}} \operatorname{Pr}\left(y_{t} \mid X, y_{<t}\right)-\operatorname{Pr}\left(y_{t} \mid X^{\prime}, y_{<t}\right) $$

- Scoring Model F. Adopt an independent summarization model BART as the scoring model in the instantiation of CoCo (since the factual consistency can be biased by the model that produced this evaluated summary). The decoding steps use teach forcing (apparently).

- Key tokens. Only count the probability of key tokens (denoted as Y') in the evaluated summary. The criteria of selecting key tokens can be task-oriented designed.

- Mask. Experiments show that span-level and sentence-level mask (containing key tokens) are more efficient; Empty input (pure LM) could make the summarization model fall into an ill-posed state.

Comments

It’s capable at identifying extrinsic hallucinations by relying more on the source but may fail at intrinsic ones.